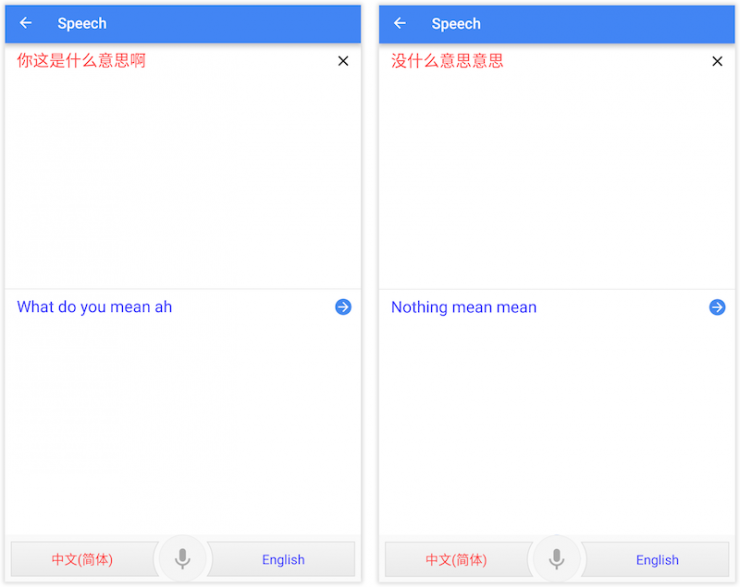

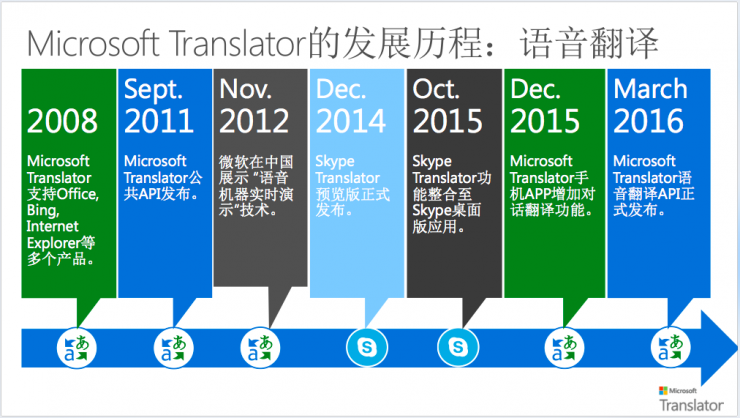

- What do you mean by this?
- Nothing, meaning.

A new translator who had just entered the job market worried that increasingly powerful machine translation would take his job, and asked, "Is there any future for this industry?" Industry veterans came to comfort him. One of them said: young man, you are being naive. Let a machine translate the dialogue above, see whether it can get it right, and then worry.

I don't know whether the young man tried. Lei Feng Net did, and found that Google Translate and Microsoft Translator, which the industry regards as the most powerful, indeed cannot handle this very Chinese piece of spoken dialogue. However, the two do not perform identically: given the same passage of speech, Google and Microsoft return different results.

Specifically, in voice translation Google keeps every word, while Microsoft omits the filler "ah" in the first sentence and one "meaning" in the second (the latter can be counted as collateral damage). Why?

Microsoft told Lei Feng Net that the reason for omitting "ah" and accidentally dropping one "meaning" is a technique unique to its speech translation, TrueText. In an official document, Microsoft explains the role of TrueText this way:

This process involves removing disfluencies (such as "ah" and "uh" and repeated words), breaking text into sentences, adding punctuation, and restoring capitalization.

This is one of the optimizations Microsoft's speech translation technology makes for spoken language. Olivier Fontana is director of Microsoft Translator product strategy at Microsoft Research. In a conversation in mid-August, he told Lei Feng Net that the way we speak usually differs from the way we write (verbal versus written). TrueText turns the words produced by speech recognition into content that is more meaningful and easier for the machine to understand.
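The cleanup steps Microsoft lists (dropping filler words and repetitions, then adding punctuation and capitalization) can be illustrated with a toy sketch. This is not Microsoft's TrueText implementation, which is not described in detail here; the filler list `FILLERS` and the function names are hypothetical, and the rules are deliberately naive.

```python
import re

# Hypothetical filler list for illustration; a real system would learn
# disfluencies from spoken-language data rather than use a fixed set.
FILLERS = {"uh", "um", "ah", "er", "hmm"}


def remove_fillers(words):
    """Drop words that appear in the filler list (case-insensitive)."""
    return [w for w in words if w.lower() not in FILLERS]


def collapse_repeats(words):
    """Collapse immediately repeated words, e.g. 'to to' -> 'to'."""
    out = []
    for w in words:
        if not out or out[-1].lower() != w.lower():
            out.append(w)
    return out


def clean_utterance(raw):
    """Turn one raw recognized utterance into a tidied sentence."""
    words = re.findall(r"[A-Za-z']+", raw)
    # Remove fillers first so that 'I uh I' also collapses to 'I'.
    words = collapse_repeats(remove_fillers(words))
    if not words:
        return ""
    sentence = " ".join(words)
    # Naive punctuation and casing: capitalize the start, add a period.
    return sentence[0].upper() + sentence[1:] + "."


print(clean_utterance("um I I want uh to to book a a flight"))
```

A rule this blunt also shows how collateral damage can happen: a legitimate repetition such as "had had" would be collapsed just like a stutter, much as one "meaning" was dropped in the example above.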
TrueText, Microsoft says, is unique in the world.

Because of his job, the author of this article deals with a lot of interview transcripts every day. Even though the stenographer has already "manually optimized" the text and removed many filler words, finding several consecutive complete sentences anywhere in a transcript is almost impossible. Apart from prepared speeches, few people express themselves in coherent sentences when they talk. Speech is halting by nature, to say nothing of remarks that carry "words within words" or leave their point unspoken. It is harder still for a machine to understand a speaker's intent and convert it into another language. TrueText amounts to having a machine tidy up the transcript.

Many people think that speech translation simply recognizes speech as text and then translates that text into another language. This is not the case. Olivier Fontana told Lei Feng Net that Microsoft tried this simple splicing approach, but the translation quality was not satisfactory.

In the end, Microsoft's solution works as follows. Speech recognition is specially optimized for spoken input. The recognized text is processed by TrueText and then passed to the text translation stage. The output of text translation is also optimized for spoken language. Finally, the machine reads the translated result aloud using mature text-to-speech (TTS) technology.

Olivier Fontana said that Microsoft faced three key challenges in developing a translation model for real-time conversation scenarios:

Collect spoken language data. Collecting colloquial corpus data takes a lot of time and money.

Train on these corpora. The computational cost is very high and requires a lot of computing power and hardware acceleration.

Develop spoken language models.
Spoken usage and pronunciation differ from language to language. Even within one language, accents, idioms, speaking speeds, and expressions vary between regional varieties, so it is difficult to cover them all with a single model.

According to Microsoft, its translation optimization for spoken language, especially for chat conversations, together with TrueText, is unique in the industry. Olivier Fontana said that traditional machine translation is built on more formal written text, whereas Microsoft has added a large corpus of spoken dialogue. He revealed that Microsoft even paid many people to chat over Skype Translator about their holidays and daily life in order to collect spoken-language data.

Skype Translator's real-time voice translation technology has attracted industry attention. This "black technology" lets speakers of two different languages communicate in real time, each in their native language (see video). Microsoft first demonstrated the technology at an academic event in Tianjin in 2012. In December 2014 it was commercialized in the Skype Translator application, and it was later brought to desktop Skype Translator and the Microsoft Translator mobile app.

In the first half of this year the technology reached a new milestone: Microsoft opened the API to everyone so that developers can integrate it into their own applications.

The various optimizations Microsoft Translator has made for spoken dialogue easily bring to mind the "conversation as a platform" strategy the company announced at this year's Build conference.

Microsoft also revealed that by the end of this year, Office 365 enterprise users will get the Skype Meeting Broadcast service. With this service, captions can be added to web conferences automatically and the conferences can be translated into different languages in real time.
Note: This article originally ran under the title "Microsoft Translator: Others are still fighting over 'written language'; we have optimized for 'spoken language'".
