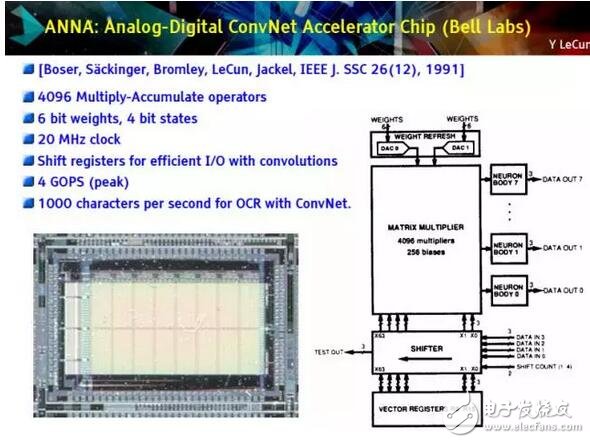

As a leading figure in machine learning, Yann LeCun developed an artificial intelligence chip called ANNA 25 years ago. Today, building computing chips for deep learning has become a shared goal of the technology giants. Back in 1992, LeCun was working at Bell Labs, the research and development institution outside New York. With a group of researchers, he designed ANNA to run deep neural networks efficiently, handling complex tasks that require analyzing large amounts of data. However, ANNA was never brought to market. Over the next two decades, computer performance kept improving, and neural networks began to match or exceed human performance at tasks such as recognizing text, faces, and speech. Artificial intelligence is still far from threatening human intelligence, however: an algorithm built for one specific task produces no meaningful results on other kinds of tasks. Even so, neural networks are reshaping the technology industry, and Google, Facebook, and Microsoft are all transforming themselves around them. LeCun is now the director of Facebook's Artificial Intelligence Research lab, where neural networks are used to identify faces, tag objects in photos, translate languages, and more.
After 25 years, LeCun believes the market now needs chips like ANNA and that they will soon appear in large numbers. Google has just unveiled its own artificial intelligence chip, the TPU. The TPU is already widely deployed in Google's data centers and has become the engine of its online empire. Every Google voice search command issued from an Android phone is processed by a TPU. This is only the beginning of a major revolution in the chip industry. CNBC and other outlets reported on April 20 that some of the TPU's developers have regrouped at a secretive startup, Groq, to develop similar artificial intelligence chips. Traditional chipmakers such as Intel, IBM, and Qualcomm are doing the same.
When Google unveiled the TPU earlier this month, it said: "It is our first machine learning chip." According to a paper published by Google, the TPU runs some tasks 15 to 30 times faster than NVIDIA's K80 GPU and Intel's Haswell CPU. In power-efficiency tests, the TPU delivered 30 to 80 times better performance per watt than the CPU and GPU (although the chips it was compared against are not the latest products). The TPU has become an important part of Google's data centers. Technology giants like Google, Facebook, and Microsoft could of course run their neural network workloads on conventional chips such as CPUs. However, a CPU is designed to handle every type of task, which makes it inefficient. On specially designed chips, neural network tasks run faster and consume less power. Google claims that the TPU has saved it the cost of building 15 additional data centers. Companies such as Google and Facebook are also bringing neural networks to mobile phones and VR headsets, so small AI chips on personal devices have become an urgent need for reducing latency. "There is still a big gap in the market for more efficient specialized chips," LeCun said.
After acquiring the startup Nervana, Intel is building a machine learning chip. IBM is also creating a hardware architecture that maps onto the design of neural networks. Qualcomm has recently begun building a dedicated chip for running neural networks. LeCun revealed this plan: because Facebook is helping Qualcomm develop machine learning technology, he knows Qualcomm's plans well. Qualcomm vice president of technology Jeff Gehlhaar confirmed the plan, saying: "We still have a long way to go in developing prototypes." Qualcomm has been working with Yann LeCun's team at Facebook AI Research to develop new chips for real-time inference. Qualcomm also recently announced plans to spend $47 billion to acquire the Dutch automotive chipmaker NXP.
Before the acquisition was announced, NXP was already working on problems in deep learning and computer vision. Qualcomm appears to hope the acquisition will strengthen its development of autonomous driving systems, one of the main application areas for deep learning and artificial intelligence. Beyond cars, embedded chips have many other points of contact with the real world, such as mobile phones and virtual reality headsets. The technology is developing rapidly, and other practical applications will soon emerge. Many companies want to seize this blue-ocean opportunity, including the traditional chip giants Intel and IBM. Big Blue is working to combine its RISC-based POWER processors with NVIDIA GPUs in its Minsky artificial intelligence server, while its research team is also exploring other chip architectures. IBM's Almaden Research Center has studied the performance of its brain-inspired chip TrueNorth, which has 1 million neurons and 256 million synapses. IBM claims that on several vision and speech datasets, deep networks running on TrueNorth achieve classification accuracy close to the current state of the art. Dharmendra Modha, chief scientist for brain-inspired computing at IBM Research, wrote in a blog post: "The goal of brain-inspired computing is to deliver a scalable neural network substrate while approaching the fundamental limits of time, space, and energy."
As the biggest player in the chip industry, Intel has not stood still either. It is developing its own chip architecture for the needs of next-generation artificial intelligence workloads. Last year, Intel announced that its first AI-specific hardware, Lake Crest (based on Nervana's technology), would launch in the first half of 2017. Lake Crest will be followed by Knights Mill, the next iteration of Intel's Xeon Phi coprocessor architecture. NVIDIA, meanwhile, has become one of the main forces in artificial intelligence hardware.
Before companies such as Google and Facebook can use a neural network to translate languages, they must first train it for that task by feeding it large datasets of existing translations. NVIDIA makes GPUs that speed up this training process. LeCun said that for training, GPUs dominate the market, especially NVIDIA's. But the arrival of Clément Farabet at NVIDIA may indicate that the company, like Qualcomm, is also exploring chips that run neural networks once they have been trained. GPUs were originally designed for graphics, not artificial intelligence. But about five years ago, companies such as Google and Facebook began using GPUs to train neural networks simply because they were the best option available. LeCun believes GPUs will continue in this role. He said that programmers and companies are now familiar with GPUs and have all the tools to use them. Displacing GPUs would mean replacing the entire ecosystem, which makes them hard to dislodge. But he also believes a new type of chip will dramatically change how large companies run neural networks, both in data centers and on consumer devices. As a result, everything from cell phones to smart lawn mowers to vacuum cleaners will change. As Google's TPU demonstrates, dedicated AI chips can take data center operations to a new level, especially for servers that perform image recognition. When running neural network tasks, these chips consume less power and generate less heat. "If you don't want to boil a pond, you need specially designed hardware," LeCun said. At the same time, as VR and AR technologies develop, mobile phones and headsets will need the same kind of chips. Last week Facebook demonstrated its new augmented reality tools, which require a neural network to recognize the surrounding environment.
But augmented reality workloads can't be offloaded to the data center: transferring data takes time, and latency can ruin the user experience. Facebook Chief Technology Officer Mike Schroepfer explained that Facebook currently handles these tasks with GPUs and another kind of chip called a digital signal processor (DSP). In the long run, however, these devices will need an entirely new type of chip. Now that the demand has emerged, chip companies are racing to claim the new market.